Correlation

Correlation, Factor Analysis, and Principal Components Analysis

Correlation, Factor Analysis, and Principal Components Analysis differ from the other procedures in DTREG because they do not generate predictive models. Instead, these procedures are used for exploratory analysis, where you are trying to understand the nature of the variables and the relationships between them.

Introduction to Correlation

Correlation is a measure of the association between two variables. That is, it indicates whether the value of one variable changes reliably as the value of the other variable changes. The correlation coefficient can range from -1.0 to +1.0. A correlation of -1.0 indicates a perfect negative association: the value of one variable always decreases as the value of the other variable increases. A correlation of +1.0 indicates a perfect positive association: when the value of one variable increases, the value of the other variable always increases. Positive correlation coefficients less than 1.0 mean that increasing values of one variable tend to go with increasing values of the other variable, but the relationship is not perfectly regular; there may be some cases where an increased value of one variable is accompanied by a decreased value of the other variable (or no change). A correlation coefficient of 0.0 means that there is no association of this kind between the variables: an increase in one variable is not associated with a consistent increase or decrease in the other.

Types of Correlation Coefficients

When used without qualification, “correlation” refers to the linear correlation between two continuous variables, and it is computed using the Pearson product-moment formula. A Pearson correlation coefficient of 1.0 occurs when an increase in the value of one variable is matched by an increase in the value of the other variable in an exactly linear fashion. That is, every unit increase in one variable corresponds to the same fixed increase in the other, so the data points fall on a straight line with a positive slope.
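
As a minimal sketch of how a Pearson coefficient can be computed, the Python function below divides the sum of cross-products of the deviations from the means by the square root of the product of the two sums of squared deviations. The function name `pearson` and the sample data are illustrative assumptions; this is not DTREG's own code.

```python
import math

def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squares of deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

x = [1.0, 2.0, 3.0, 4.0, 5.0]
r_up = pearson(x, [3.0 + 2.0 * v for v in x])     # points on a rising straight line
r_down = pearson(x, [10.0 - 0.5 * v for v in x])  # points on a falling straight line
print(r_up, r_down)
```

Any exact straight-line relationship gives a coefficient of magnitude 1.0, whatever the slope or intercept; only the sign of the slope decides between +1.0 and -1.0.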

If two variables have an association but the relationship is not linear, then the magnitude of the Pearson correlation coefficient will be less than 1.0 even if there is a perfectly reliable change in one variable as the other changes. The Spearman rank-order correlation coefficient is a widely used method for handling this kind of non-linear but consistently increasing or decreasing (monotonic) relationship. Spearman correlation sorts the values being correlated and replaces the values by their order (rank) in the sorted list. So the smallest value is replaced by 1, the second smallest by 2, etc. Correlation is then computed using the rank-orders rather than the original data values. The Spearman correlation coefficient will be 1.0 if an increase in one variable always goes with an increase in the other variable, even if the response is not linear.
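
The ranking step described above can be sketched in a few lines of Python. The helper names (`ranks`, `pearson`, `spearman`) and the cubic sample data are assumptions chosen for illustration, and giving tied values their average rank is a common convention rather than something stated in this document.

```python
import math

def ranks(values):
    """Replace each value by its 1-based rank; tied values share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of equal values (ties).
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result

def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def spearman(x, y):
    """Spearman coefficient: Pearson correlation computed on the ranks."""
    return pearson(ranks(x), ranks(y))

x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [v ** 3 for v in x]          # always increasing, but not a straight line
r_pearson = pearson(x, y)        # less than 1.0
r_spearman = spearman(x, y)      # 1.0, because the rank orders agree exactly
print(r_pearson, r_spearman)
```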

Most correlation programs can compute correlations only between two continuous variables. Since DTREG allows categorical variables, it must also compute correlations between categorical variables. It’s not too hard to grasp the idea of correlating two categorical variables with dichotomous values such as correlating Sex (male/female) with Outcome (live/die), but it is harder to imagine correlating categorical variables with multiple categories such as Marital Status with State of Residence. However, there are established correlation procedures for handling these cases, and DTREG implements procedures for handling all combinations of correlations between continuous, dichotomous, and general categorical variables. The correlation between two multi-category variables is essentially an ANOVA. These correlations can vary only from 0.0 to 1.0; they cannot be negative.
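
To make the ANOVA connection concrete, here is a sketch of one established ANOVA-style measure, the correlation ratio (eta), which relates a categorical variable to a continuous one. It is the square root of the between-group sum of squares divided by the total sum of squares, so it can range only from 0.0 to 1.0. This is an illustrative assumption and is not necessarily the procedure DTREG uses; the function name and the data are hypothetical.

```python
import math
from collections import defaultdict

def correlation_ratio(categories, values):
    """Eta = sqrt(between-group sum of squares / total sum of squares)."""
    groups = defaultdict(list)
    for category, value in zip(categories, values):
        groups[category].append(value)
    grand_mean = sum(values) / len(values)
    ss_total = sum((v - grand_mean) ** 2 for v in values)
    ss_between = sum(
        len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups.values()
    )
    return math.sqrt(ss_between / ss_total)

# Hypothetical data: the category largely determines the value.
status = ["single", "single", "married", "married", "divorced", "divorced"]
income = [30.0, 32.0, 50.0, 52.0, 40.0, 42.0]
eta_strong = correlation_ratio(status, income)

# Hypothetical data: every category has the same mean, so eta is 0.
eta_none = correlation_ratio(["a", "a", "b", "b"], [1.0, 2.0, 1.0, 2.0])
print(eta_strong, eta_none)
```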

The Correlation Matrix

If you compute the correlation between n variables, then these correlations can be presented in the form of an n by n matrix such as shown here:

             V1       V2       V3       V4       V5       V6
          -------  -------  -------  -------  -------  -------
    V1     1.0000   0.4944   0.7134  -0.1041   0.1141   0.0762
    V2     0.4944   1.0000   0.3882   0.0535  -0.0597   0.1423
    V3     0.7134   0.3882   1.0000  -0.0247   0.2038   0.0583
    V4    -0.1041   0.0535  -0.0247   1.0000   0.6201   0.6353
    V5     0.1141  -0.0597   0.2038   0.6201   1.0000   0.4551
    V6     0.0762   0.1423   0.0583   0.6353   0.4551   1.0000
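
A matrix like the one above can be built by correlating every pair of variables. The sketch below does this in plain Python for three short, made-up columns; the variable names, the data, and the helper functions are assumptions for illustration only.

```python
import math

def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def correlation_matrix(columns):
    """Return the n by n matrix of pairwise correlations, in dict key order."""
    names = list(columns)
    return [[pearson(columns[a], columns[b]) for b in names] for a in names]

# Hypothetical data, one list of observations per variable.
data = {
    "V1": [2.0, 4.0, 6.0, 8.0, 10.0],
    "V2": [1.0, 3.0, 2.0, 5.0, 4.0],
    "V3": [9.0, 7.0, 8.0, 3.0, 1.0],
}
matrix = correlation_matrix(data)

# Print the matrix in the same layout as the report.
print("      " + "".join(f"{name:>9}" for name in data))
for name, row in zip(data, matrix):
    print(f"{name:<6}" + "".join(f"{value:9.4f}" for value in row))
```

The result is symmetric, because the correlation of V1 with V2 equals the correlation of V2 with V1, and its diagonal is 1.0, because every variable correlates perfectly with itself.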

Introduction to Factor Analysis and Principal Components Analysis

When you find a set of variables that are highly correlated with each other, it is reasonable to wonder if this mutual association may be due to some common underlying cause. For example, suppose values for the following variables are collected for an incoming college freshman class: High school GPA, IQ, SAT Verbal, SAT Math, Height, Weight, Waist size, and Chest size. A correlation matrix for these variables is likely to show large positive correlations between High school GPA, IQ, and SAT scores. Similarly, Height, Weight, Waist and Chest measurements will probably be positively correlated. So, the question is whether High school GPA, IQ, and SAT scores are related because of some underlying, common factor. The answer, of course, is yes, because they are all measures of intelligence. Similarly, Height, Weight, Waist, and Chest measurements are all related to physical size. So the conclusion is that there are only two underlying factors that are being measured by the eight variables, and these factors are intelligence and physical size. These common factors are sometimes called latent variables. Since “intelligence” is an abstract concept, it cannot be measured directly: instead, proxy measures such as GPA, IQ, etc. are used to estimate the intelligence of an individual.

In the simple example presented above, it’s not too difficult to isolate the pattern of correlations that link the variables in the two groups; but when you have hundreds of variables and there are multiple underlying factors, it is much more difficult to identify the factors and the variables associated with each factor.

The purpose of Factor Analysis is to identify a set of underlying factors that explain the relationships between correlated variables. Generally, there will be fewer underlying factors than variables, so the factor analysis result is simpler than the original set of variables.

Principal Components Analysis is very similar to Factor Analysis, and the two procedures are sometimes confused. Both procedures are built on the same mathematical techniques, but they rest on different assumptions. Factor Analysis assumes that the relationship (correlation) between variables is due to a set of underlying factors (latent variables) that are being measured by the variables.

Principal Components Analysis is not based on the idea that there are underlying factors that are being measured. It is simply a technique for reducing the number of variables by finding linear combinations of the variables that explain as much as possible of the variance in the original variables. Factor analysis is used more often than principal components analysis.
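
To illustrate what a linear combination that explains variance means, the sketch below finds the first principal component of the six-variable correlation matrix shown earlier. It uses power iteration, a simple textbook method that repeatedly multiplies a weight vector by the matrix and rescales it. This is an assumption made for illustration and is not a description of how DTREG computes components; the function name is hypothetical.

```python
import math

# Correlation matrix copied from the example in "The Correlation Matrix".
R = [
    [ 1.0000,  0.4944,  0.7134, -0.1041,  0.1141,  0.0762],
    [ 0.4944,  1.0000,  0.3882,  0.0535, -0.0597,  0.1423],
    [ 0.7134,  0.3882,  1.0000, -0.0247,  0.2038,  0.0583],
    [-0.1041,  0.0535, -0.0247,  1.0000,  0.6201,  0.6353],
    [ 0.1141, -0.0597,  0.2038,  0.6201,  1.0000,  0.4551],
    [ 0.0762,  0.1423,  0.0583,  0.6353,  0.4551,  1.0000],
]

def first_principal_component(corr, iterations=5000):
    """Largest eigenvalue and unit-length eigenvector, by power iteration."""
    n = len(corr)
    w = [1.0 / math.sqrt(n)] * n
    for _ in range(iterations):
        v = [sum(corr[i][j] * w[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in v))
        w = [x / norm for x in v]
    # Rayleigh quotient w' R w gives the eigenvalue for the unit vector w.
    eigenvalue = sum(
        w[i] * sum(corr[i][j] * w[j] for j in range(n)) for i in range(n)
    )
    return eigenvalue, w

eigenvalue, weights = first_principal_component(R)
share = eigenvalue / len(R)  # fraction of the total variance of 6 standardized variables
print(f"eigenvalue = {eigenvalue:.4f}, share of variance = {share:.1%}")
print("weights:", [round(x, 4) for x in weights])
```

The weights define the combination of standardized variables with the largest possible variance, and the eigenvalue is that variance. Further components would be found the same way after removing the part of the matrix already explained.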

Determining the Number of Factors to Use

In the example above, we concluded that the eight variables were related to two underlying factors, intelligence and physical size. However, the choice of two factors was arbitrary. It is likely that IQ and SAT scores will have higher correlation with each other than with GPA, because GPA is largely affected by motivation and effort. Similarly, weight, waist, and chest size may be measures of heft while height may be something different. So perhaps we should use four factors: (1) IQ, SAT Verbal, SAT Math; (2) GPA; (3) Weight, Waist, and Chest size; (4) Height.

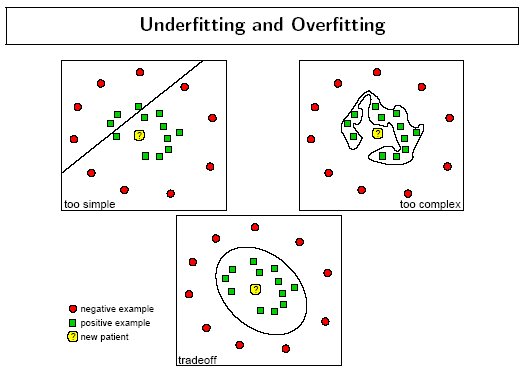

Determining the number of factors to use has been an issue since the beginning of factor analysis. There is no perfect way to determine how many factors to use: there are a number of suggested guidelines, but ultimately it is a judgment call. One of the most useful measures is a chart called a Scree Plot that shows what percentage of the variance is accounted for by each factor. A small scree plot is shown in the analysis report; a larger and prettier one can be seen by clicking Charts/Model Size after finishing an analysis. Here is an example of a Scree Plot:

The horizontal axis shows the number of factors. The vertical axis shows the percent of the overall variance explained by each factor. Notice that there is a sharp drop-off after two factors, so in this case it is reasonable to retain two factors. DTREG includes a number of methods for controlling how many factors are used. See the Factor Analysis property page for details.

Output Generated by Factor Analysis

Factor Importance (Eigenvalue) Table

The Factor Importance table shows the relative and cumulative amount of variance explained by each factor. Here is an example of such a table:

    ============== Factor Importance ==============

    Factor  Eigenvalue  Variance %  Cumulative %  Scree Plot
    ------  ----------  ----------  ------------  --------------------
       1      1.90099      31.683        31.683   ********************
       2      1.68129      28.022        59.705   *****************
       3      0.18959       3.160        62.865   **
       4      0.02137       0.356        63.221
       5     -0.01090        .             .
       6     -0.20007        .             .

    Maximum allowed number of factors = 2
    Stop when cumulative explained variance = 80%
    Minimum allowed eigenvalue = 0.50000
    Number of factors retained = 2

This table lists each factor, its associated eigenvalue, the percent of total variance explained by the factor, and the cumulative variance explained by all factors up to and including this one. A small scree plot is shown on the right.

One popular rule of thumb in determining how many factors to use is to use only factors whose eigenvalues are at least 1.0. However, experience has shown that this may exclude useful factors, so a smaller eigenvalue cutoff is recommended.
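
The sketch below reproduces the Variance % column of the example table (each eigenvalue divided by the number of variables, 6) and applies the three stopping settings listed under the table. How DTREG combines these settings internally is not stated here, so the rule used (stop as soon as any one limit is reached) and the function name `retain_factors` are assumptions.

```python
# Eigenvalues copied from the Factor Importance example, largest first.
EIGENVALUES = [1.90099, 1.68129, 0.18959, 0.02137, -0.01090, -0.20007]
N_VARIABLES = 6

def retain_factors(eigenvalues, n_variables, max_factors, stop_cum_pct, min_eigenvalue):
    """Count factors kept before any stopping limit is reached (assumed rule)."""
    retained = 0
    cumulative_pct = 0.0
    for eigenvalue in eigenvalues:
        if (retained >= max_factors
                or cumulative_pct >= stop_cum_pct
                or eigenvalue < min_eigenvalue):
            break
        retained += 1
        cumulative_pct += 100.0 * eigenvalue / n_variables
    return retained, cumulative_pct

variance_pct = [100.0 * ev / N_VARIABLES for ev in EIGENVALUES]

# Settings shown in the example report.
kept, cum = retain_factors(EIGENVALUES, N_VARIABLES,
                           max_factors=2, stop_cum_pct=80.0, min_eigenvalue=0.5)

# The eigenvalue >= 1.0 rule of thumb, with no other limit in effect.
kept_rule_of_thumb, _ = retain_factors(EIGENVALUES, N_VARIABLES,
                                       max_factors=6, stop_cum_pct=100.0, min_eigenvalue=1.0)

# A smaller cutoff of 0.1 lets the third factor in.
kept_small_cutoff, _ = retain_factors(EIGENVALUES, N_VARIABLES,
                                      max_factors=6, stop_cum_pct=100.0, min_eigenvalue=0.1)
print(kept, round(cum, 3), kept_rule_of_thumb, kept_small_cutoff)
```

With these eigenvalues the report settings and the 1.0 rule of thumb both keep two factors, while lowering the cutoff to 0.1 keeps a third.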

Table of Communalities

    ============== Communalities ==============

          Initial   Final   Common Var. %  Unique Var. %
          -------  -------  -------------  -------------
    V1     0.5908   0.9285      92.848          7.152
    V2     0.3250   0.2554      25.540         74.460
    V3     0.5332   0.5693      56.927         43.073
    V4     0.5938   0.9234      92.342          7.658
    V5     0.4861   0.4449      44.488         55.512
    V6     0.4326   0.4608      46.083         53.917

A communality is the proportion of the variance in a variable that is accounted for by the retained factors. For example, in the table above, about 93% of the variance in V1 is accounted for by the factors, while only about 44% of the variance in V5 is accounted for.

Factor Loading Matrix

    ============== Un-rotated Factor Matrix ==============

               Fac1         Fac2
            ---------    ---------
    V1        0.6167 *     0.7404 *
    V2        0.3619       0.3527
    V3        0.5359       0.5311
    V4        0.6727 *    -0.6862 *
    V5        0.5724      -0.3423
    V6        0.5677      -0.3722
    ------  ---------    ---------
    Var.      1.901        1.681

The factor loading matrix shows the correlation between each variable and each factor. For example, V1 has a 0.6167 correlation with Factor 1 and a 0.7404 correlation with Factor 2. From the factor matrix shown above, we see that Factor 1 is related most closely to V4, followed by V1. V5 and V6 also load moderately on Factor 1. Factor 2 is related most strongly to V1 (positively) and V4 (negatively). So when trying to interpret the meaning of Factor 2, you should try to figure out the common connection between V1 and V4.
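
The loadings also tie the tables in this section together. As a short worked check in Python (the variable names are illustrative), summing the squared loadings across each row of the un-rotated matrix reproduces the Final communalities, and summing them down each column reproduces the Var. row, which matches the first two eigenvalues in the Factor Importance table.

```python
# Un-rotated loadings copied from the example report: (Fac1, Fac2) per variable.
LOADINGS = {
    "V1": (0.6167, 0.7404),
    "V2": (0.3619, 0.3527),
    "V3": (0.5359, 0.5311),
    "V4": (0.6727, -0.6862),
    "V5": (0.5724, -0.3423),
    "V6": (0.5677, -0.3722),
}

# Communality of a variable = sum of its squared loadings over the retained factors.
communalities = {
    name: sum(value ** 2 for value in row) for name, row in LOADINGS.items()
}

# Variance explained by a factor = sum of the squared loadings in its column.
factor_variance = [
    sum(row[j] ** 2 for row in LOADINGS.values()) for j in range(2)
]

for name, h2 in communalities.items():
    print(f"{name}  communality = {h2:.4f}  unique = {1.0 - h2:.4f}")
print("Var.", [round(v, 3) for v in factor_variance])
```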

Factor Rotation

    ============== Rotated Factor Matrix ==============

    Rotation method: Varimax

               Fac1         Fac2
            ---------    ---------
    V1       -0.0056       0.9636 *
    V2        0.0494       0.5030
    V3        0.0674       0.7515 *
    V4        0.9566 *    -0.0911
    V5        0.6583 *     0.1072
    V6        0.6740 *     0.0813
    ------  ---------    ---------
    Var.      1.901        1.681

There are several methods of rotating the factor matrix that make the relationship between the variables and the factors easier to understand. The factor matrix presented above is the result of rotating the factor matrix presented in the previous section. In this case Varimax rotation was used. After a Varimax rotation, some of the factor loadings will be large and the rest will be close to zero, making it easy to see which variables correlate strongly with each factor. Varimax is the most popular rotation method. After performing the Varimax rotation, it is easy to see that Factor 1 is related to variables V4, V5, and V6, whereas Factor 2 is related to variables V1, V2, and V3.
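
With only two factors, an orthogonal rotation is a single angle, so the idea behind Varimax can be sketched by trying many angles and keeping the one that maximizes the varimax criterion: the variance of the squared loadings within each factor, summed over the factors. The brute-force angle scan, the function names, and the use of the raw (un-normalized) criterion are simplifying assumptions for illustration; DTREG's algorithm may differ, so the numbers need not match the report exactly. The order and sign of the rotated columns are arbitrary.

```python
import math

# Un-rotated loadings copied from the example report, rows V1..V6.
UNROTATED = [
    (0.6167, 0.7404),
    (0.3619, 0.3527),
    (0.5359, 0.5311),
    (0.6727, -0.6862),
    (0.5724, -0.3423),
    (0.5677, -0.3722),
]

def rotate(loadings, theta):
    """Rotate the two factor axes by theta radians (orthogonal rotation)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(x * c + y * s, -x * s + y * c) for x, y in loadings]

def varimax_criterion(loadings):
    """Sum over factors of the variance of the squared loadings."""
    p = len(loadings)
    total = 0.0
    for j in range(2):
        squares = [row[j] ** 2 for row in loadings]
        mean_square = sum(squares) / p
        total += sum((q - mean_square) ** 2 for q in squares) / p
    return total

def varimax_two_factors(loadings, steps=9000):
    """Scan angles in [0, 90) degrees and keep the one with the best criterion."""
    best_step = max(
        range(steps),
        key=lambda k: varimax_criterion(rotate(loadings, math.pi / 2 * k / steps)),
    )
    theta = math.pi / 2 * best_step / steps
    return rotate(loadings, theta), theta

rotated, theta = varimax_two_factors(UNROTATED)
print(f"rotation angle = {math.degrees(theta):.2f} degrees")
for name, (a, b) in zip(["V1", "V2", "V3", "V4", "V5", "V6"], rotated):
    print(f"{name}  {a:8.4f}  {b:8.4f}")
```

Because the rotation is orthogonal, each variable's communality (the sum of its squared loadings) is unchanged; only the way that variance is divided between the two factors changes.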

A Varimax rotation is an orthogonal transformation. That means the factor axes remain orthogonal to each other, and the factors are uncorrelated. A Promax rotation relaxes that restriction and allows the rotated axes to be oblique and correlated with each other. When a Promax rotation is done, DTREG displays a table showing the correlations between the rotated factors:

    ==== Correlation Between Rotated Factor Axes ====

              Fac1     Fac2
            -------  -------
    Fac1     1.0000  -0.1400
    Fac2    -0.1400   1.0000